Automatic Speech Recognition (ASR) is one of those technologies you either love or hate. Sadly, a large number of businesses (mainly financial institutions) have decided it’s cheaper to get a computer to answer the telephone than to pay a human, and this undoubtedly generates the most hate. When they shift the burden from the human operator to the caller, they really don’t care how many times you have to repeat your account details, because it costs them virtually nothing. However, it drives customers crazy, and eventually they will vote with their feet.

On the other hand, if you are driving a vehicle and want to activate a map without causing an accident, you may love it.

When it does not do what you want, it is tempting to assume that ASR falls woefully short of human performance, yet there are circumstances in which it actually performs better. The human does much more than convert speech into text: the human extracts “meaning”, and to do that applies many different knowledge sources to establish context. Computers are not bad at recognising human speech; they are simply not so good at understanding it, yet.

But that will change – and is changing. The question is, if you have an application for ASR, should you wait, or be like the banks and drive your customers nuts now?

Perhaps surprisingly for someone who is a great evangelist of ASR, I would say that in “real-time” applications, ASR should be deployed only in situations where it can be shown to be at least as effective as a human. What do I mean by real time? I mean situations where a response is required pretty much as fast as a human listener would provide one.

But what other applications for ASR are there? Many speech-to-text transcription tasks do not require a real-time response at all, and in many cases turnaround times of days, weeks or even years are acceptable. Examples include dictation and phone call transcription.

Five years ago, when we first integrated VoIP phone calls into our Threads intelligent message hub, it quickly became apparent that being able to search phone calls would be of major benefit to our business. After all, everyone was able to search emails to locate vital messages, so why should phone calls be any different? At that time, ASR systems were nowhere near as advanced as they are now, yet my knowledge of the subject told me that the words with the most information value were the easiest to recognise. This is because more complex words tend to have more specific meanings, and the more sounds a word contains, the less likely it is to be confused with another word. For example, you would never search emails for “and” or “but”, whereas you are far more likely to search for, say, “vacuum cleaner”. “Vacuum cleaner” is phonetically more complex than “but”.

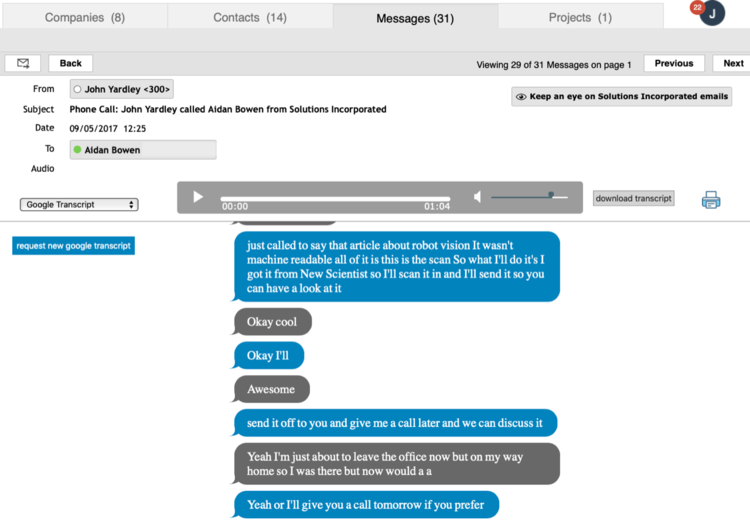

So we initially decided simply to allow searching for “keywords”. But by the time we were ready to deploy this, ASR performance had improved to the extent that giving users a full transcription of a telephone call, while by no means perfect, provided a massively useful resource. It enabled users not only to search for keywords but also, once they had found the conversation they were looking for, to scrub through the call visually and isolate the specific part they needed. It was nothing short of a revelation.
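The search-then-scrub workflow described above can be sketched roughly as follows. This is a minimal illustration, not the Threads implementation: it assumes a transcript stored as a list of recognised words, each with start and end times in seconds, and the `Word` type and `find_phrase` function are hypothetical names. Each hit returns the time at which a player could start playback, which is what makes scrubbing to the right part of the call possible.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Word:
    """One recognised word with start/end times in seconds (hypothetical format)."""
    text: str
    start: float
    end: float


def find_phrase(words: list[Word], phrase: str) -> list[float]:
    """Return the start time of every occurrence of `phrase` in the transcript.

    Matching is exact, case-insensitive and word-by-word, so "vacuum cleaner"
    matches the consecutive recognised words "vacuum" and "cleaner".
    """
    target = phrase.lower().split()
    if not target:
        return []
    n = len(target)
    hits = []
    for i in range(len(words) - n + 1):
        window = [w.text.lower().strip(".,?!") for w in words[i:i + n]]
        if window == target:
            hits.append(words[i].start)  # seek position for scrubbing the audio
    return hits


# Example: a short, made-up transcript fragment.
transcript = [
    Word("the", 12.0, 12.2),
    Word("vacuum", 12.2, 12.7),
    Word("cleaner", 12.7, 13.3),
    Word("arrived", 13.3, 13.9),
    Word("but", 40.1, 40.3),
    Word("the", 40.3, 40.4),
    Word("vacuum", 40.4, 40.9),
    Word("cleaner", 40.9, 41.5),
]
print(find_phrase(transcript, "Vacuum cleaner"))  # [12.2, 40.4]
```

Exact matching is the simplest possible choice here. Because real transcripts are imperfect, a practical system would probably also want some form of fuzzy or phonetic matching, which this sketch does not attempt.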

Now bear in mind that when you are searching for something, it is unlikely to be only seconds after the call ended. This means that instead of the fast ASR methods an interactive situation would need, we could apply much better methods that take longer and bring more “understanding” to the speech.

Once you do this, though, you realise the value of transcribing as many historic calls as you can, going back as far as your business might reasonably need.

And here is the thing. Had we decided not to store our phone calls in Threads until we had the ASR performance of a human, we would have lost much vital data. But by storing them (importantly, without destroying any necessary information), we can re-apply ASR at any time we wish in the future. In 10 years’ time, ASR transcription may well be as good as a human’s, maybe even better. But we cannot benefit from that if we never stored the calls. Even within a single year, we have seen performance improve on the same recordings.

So even if you are not yet ready to deploy ASR, I cannot overemphasise the value of storing your calls as soon as you possibly can. If you wait until ASR is as good as a human, you could lose a really important resource, one that your competitors may have had the foresight to benefit from.